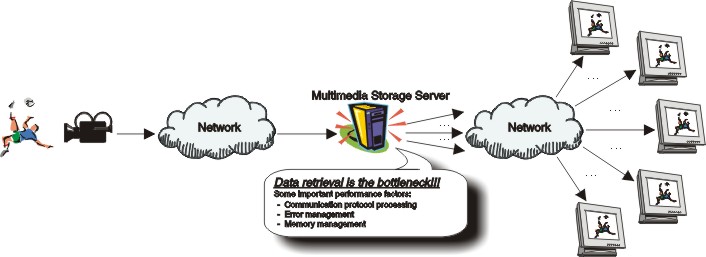

Figure 1: Application scenario.

Traditionally, when data is transmitted between end systems, all seven protocol layers of the OSI reference model (Figure 2), or all four layers of the Internet protocol suite, must be processed, whereas intermediate systems (nodes) only need to handle the three lowest layers. The communication bottleneck lies in the transport and higher-layer protocols, where, for example, checksum calculation is among the most time-consuming operations. Consequently, a server that delivers the same data to many clients must process that data through all end-to-end protocols once per client, repeating the same CPU-intensive operations every time.
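To illustrate why checksumming is costly, the Python sketch below implements the Internet checksum from RFC 1071, the algorithm used by UDP and TCP. The routine reads every 16-bit word of its input, so its cost grows linearly with the amount of data. For simplicity it is applied to the payload only (the real UDP/TCP checksum also covers a pseudo-header). The helper `serve_traditionally` is hypothetical and only counts work: a server delivering the same packets to several clients repeats the whole computation once per client.

```python
def internet_checksum(data: bytes) -> int:
    """Internet checksum (RFC 1071): one's complement of the one's complement sum of 16-bit words."""
    if len(data) % 2:
        data += b"\x00"                          # pad odd-length data with a zero byte
    total = 0
    for i in range(0, len(data), 2):
        total += (data[i] << 8) | data[i + 1]    # add each big-endian 16-bit word
    while total >> 16:                           # fold carries back into the low 16 bits
        total = (total & 0xFFFF) + (total >> 16)
    return ~total & 0xFFFF


def serve_traditionally(packets: list[bytes], clients: int) -> int:
    """Hypothetical traditional server loop; returns how many bytes were checksummed."""
    bytes_checksummed = 0
    for _client in range(clients):
        for payload in packets:
            internet_checksum(payload)           # same payload, same result, recomputed for every client
            bytes_checksummed += len(payload)
    return bytes_checksummed
```

For example, `serve_traditionally([bytes(1024)] * 10, 5)` feeds 51,200 bytes through the checksum routine, five times the size of the data actually stored.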

|  |

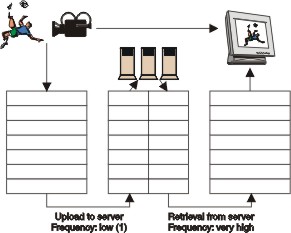

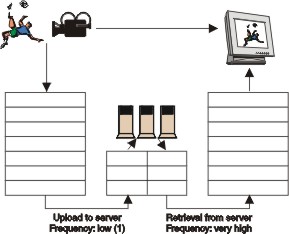

| Figure 2: Traditional data storage in a server. | Figure 3: Network level framing. |

The advantage of network level framing (Figure 3), where data is stored at the intermediate storage node as packets that already include their transport-level headers, is that CPU-intensive end-to-end protocol processing is avoided, or at least reduced to a minimum, at the node. This reduces the load on the CPU and the system bus; in other words, more clients can be served with the same hardware resources.
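The Python sketch below shows one way the per-client work could be reduced to a minimum; it is an illustrative assumption for IPv4/UDP, not our implementation. One subtlety is that the UDP checksum also covers a pseudo-header containing the client's IP address, so a single finished checksum cannot be stored for all clients. The sketch therefore stores the one's complement sum of the payload once, in a hypothetical record type `StoredPacket`, and the hypothetical send routine `finish_packet` folds in only the 12-byte pseudo-header and the 8-byte UDP header. This split is valid because the one's complement sum is commutative and associative (RFC 1071).

```python
import socket
import struct
from dataclasses import dataclass, field

UDP_PROTO = 17


def ones_complement_sum(data: bytes, start: int = 0) -> int:
    """16-bit one's complement sum (RFC 1071), before the final inversion."""
    if len(data) % 2:
        data += b"\x00"                          # pad odd-length data with a zero byte
    total = start
    for i in range(0, len(data), 2):
        total += (data[i] << 8) | data[i + 1]
    while total >> 16:                           # end-around carry
        total = (total & 0xFFFF) + (total >> 16)
    return total


@dataclass
class StoredPacket:
    """Hypothetical on-disk record, written once when the data is stored."""
    src_port: int
    payload: bytes
    payload_sum: int = field(init=False)

    def __post_init__(self) -> None:
        # The expensive pass over every payload byte happens only once, at store time.
        # The payload starts at an even offset in the datagram, so summing it separately is safe.
        self.payload_sum = ones_complement_sum(self.payload)


def finish_packet(p: StoredPacket, src_ip: str, dst_ip: str, dst_port: int) -> bytes:
    """Per-client send path: only the pseudo-header and UDP header (20 bytes) are summed."""
    length = 8 + len(p.payload)                  # UDP length covers header + payload
    pseudo = (socket.inet_aton(src_ip) + socket.inet_aton(dst_ip)
              + struct.pack("!BBH", 0, UDP_PROTO, length))
    header = struct.pack("!HHHH", p.src_port, dst_port, length, 0)  # checksum field zero while summing
    checksum = ~ones_complement_sum(pseudo + header, start=p.payload_sum) & 0xFFFF
    if checksum == 0:
        checksum = 0xFFFF                        # RFC 768: a computed zero is sent as all ones
    return header[:6] + struct.pack("!H", checksum) + p.payload
```

Whatever the payload size, each call to `finish_packet` sums only 20 header bytes on top of the stored payload sum, so a 1 KB payload is summed once when it is stored rather than once per client.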

However, this gain does not come for free. Because packet headers are stored as well, more storage space is needed and more data must be read from relatively slow disks. The overhead depends on the packet size; e.g., storing a 1 KB UDP packet requires 8 extra bytes for the UDP header (an increase of about 0.8%). In practice, multimedia data is often sent in larger packets, which makes the relative overhead even smaller. The additional disk I/O could also become a problem as the amount of data grows, but since disks are getting faster and the data is stored in a disk array, we expect it to remain manageable. For example, with a Cheetah disk (about 10,000 RPM), the extra time to retrieve a stored 1 KB UDP packet would be minimal, because reading a few additional header bytes takes far less time than the average rotational latency of about 3 ms. Furthermore, in a multi-user scenario a packet may be cached and sent to several clients, which increases the gain further.
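The storage and disk-time estimates above can be checked with a few lines of Python. The header sizes are the standard minimums (8 bytes for a UDP header per RFC 768, 20 bytes for an IPv4 header without options); which headers are actually stored is an assumption here. The 10,000 RPM figure is the one quoted for the Cheetah disk, while the 40 MB/s media transfer rate is a hypothetical value chosen for illustration, not a measurement.

```python
UDP_HEADER_BYTES = 8     # RFC 768
IPV4_HEADER_BYTES = 20   # minimum IPv4 header, no options


def header_overhead(payload_bytes: int, header_bytes: int) -> float:
    """Extra storage for stored headers, as a fraction of the payload size."""
    return header_bytes / payload_bytes


def avg_rotational_latency_ms(rpm: float) -> float:
    """Average rotational latency: the time for half a revolution, in ms."""
    return 0.5 * 60_000 / rpm


def extra_read_ms(extra_bytes: int, media_rate_mb_per_s: float) -> float:
    """Time in ms to read the extra header bytes at an assumed sustained media rate."""
    return extra_bytes / (media_rate_mb_per_s * 1e6) * 1000


if __name__ == "__main__":
    KB = 1024
    for name, hdr in [("UDP", UDP_HEADER_BYTES), ("UDP + IPv4", UDP_HEADER_BYTES + IPV4_HEADER_BYTES)]:
        print(f"{name} headers on a 1 KB payload: {header_overhead(KB, hdr):.2%} extra storage")
    latency = avg_rotational_latency_ms(10_000)      # Cheetah-class disk, about 10,000 RPM
    extra = extra_read_ms(UDP_HEADER_BYTES, 40.0)    # 40 MB/s is a hypothetical media rate
    print(f"avg. rotational latency {latency:.1f} ms vs. extra read time {extra * 1000:.2f} us")
```

At the assumed rate, 8 header bytes add about 0.2 µs of transfer time, while every random access already pays an average rotational delay of 3 ms at 10,000 RPM.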

So far, this work is in the design stage, and we have not yet measured the gain in CPU processing against the increased storage space and disk I/O requirements. However, we think the idea is interesting and would like to test it in a real system.